This is the second in our 3-part series on the idea of ETI (extra-terrestrial intelligence), and the theories and ideas that astronomers and cosmologists have typically advanced. We were specifically prompted to do this by an article we referred to yesterday, by Howard Smith, in the latest American Scientist. See Part I for more on the source, and for background.

Within his area of expertise, Smith makes many valuable points about why he believes that either there is no intelligent life 'out there', or why even if there is it's moot to talk about because we'll never detect it. However, we think he is typical of astronomers who seem to feel no restraint in leaving their own field, which they know about, to speculate very naively about evolution, for reasons we'll discuss below. But let's briefly consider his more robust points about ETI first.

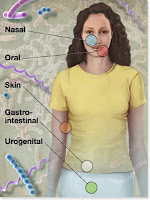

First, he's only talking about 'intelligent beings'. Micro-organisms that can't communicate with us are irrelevant. If there's only 'primitive life' out there, for all intents and purposes we're still alone -- the life he's interested in has to have something equivalent to a radio technology with which to send signals.

That life has to be close enough to Earth to allow a signal to get here, or for ours to get there, before the universe ends. It has to be within the 'cosmic horizon', the constraint being how far light can travel within the age of the universe. Since the universe is expanding and distances are getting larger, this further restricts the possible planets from which signals can reach us. And distant signals of course represent not life as it exists now, but life as it existed perhaps eons ago when the signals were sent, so we may never catch up with ETI in anything like real time. But this means that that distant life would have evolved a lot sooner than life on Earth.

And that distant planet has to be stable. That is, its host star must be of stable size, with radiative output stable enough to have given life time to evolve, and of the right age -- not so young that life hasn't had time to evolve, and not so old that the star's luminosity, which increases with time, has grown great enough to overwhelm and destroy the planet (many of these criteria might in fact be called the Goldilocks criteria).

The planet's orbit must be just right vis à vis its star, and the planet must be "massive enough to hold an atmosphere, but not so massive that plate tectonics are inhibited, because that would reduce geological processing and its crucial consequences for life."

And the planet must "contain elements needed for complex molecules (carbon, for example), but it also needs elements that are perhaps not necessary for making life itself but that are essential for the environment that can host intelligent life: silicon and iron, for example, to enable plate tectonics, and a magnetic field to shield the planet's surface from lethal charged winds from its star."

But now, Smith works out some--indeed many--of his calculations from what seems to be an evolutionary point of view. Life has to start and then evolve to become intelligent. Here, let's take for granted that we know what 'intelligent' means.

In a nutshell, Smith asks how many planets have liquid water. How many have ample carbon. How many would be in systems where gravitational and other forces tilt the planet at an angle so it has seasonality of climate. How many have physical and gravitational properties that will lead to an atmosphere. How many have radioactive cores or other means by which they have plate tectonics to shift around the hardened crust and renew or recycle needed elements for life. How many would have the billions of years needed for intelligence to evolve, which has to do with the age of their solar systems. How many would circle around only one star rather than two, so their gravitational status was stable and so they did not have long cycles of being too hot or too cold. In how many could DNA evolve and function, leading to intelligent beings. And so on.

It all sounds sensible and of course the questions are relevant. But what they ask in essence is: how many planets are just like earth and their life like earth-life? But that is far off the central question--are we alone in the universe?

The criteria he uses are post hoc--they say this is how earth-life evolved, and so this must be how other life has to evolve, so let's see how likely it is that just the same conditions exist elsewhere.

Playing the infinity game we discussed in Part I, one could say that if the universe is infinite, then the same conditions must exist (infinitely many times!) in the universe. But if the universe is finite--no matter how big it is or how numerous its stars--then things become different. That's because if you multiply guesstimates of the probabilities of all the just-like-earth conditions, the net probability of all of them being true becomes vanishingly small. Then, even if there is a planet that's just-like-earth, the likelihood of its being contemporaneous (in the galactic communication sense) with us would be so small as to lead to the conclusion--his conclusion--that we had better take care here because we're the only here there is.

Of course there is no way to know how different conditions among these earthy planets could be and still be compatible with life, and again the issue is the probability of earthy viability times the number of existing space-objects that could, in principle, host life. If the product is greater than one, then the statistical expectation is that there is at least one such planet somewhere.
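To make the arithmetic of the last two paragraphs concrete, here is a minimal sketch of it in Python. Every number in it is a placeholder we made up for illustration (none comes from Smith's article or from any measurement), the condition names are just labels, and treating the conditions as independent is itself an assumption.

```python
import math

# Hypothetical guesstimates, invented purely for illustration; none of
# these values comes from Smith's article or from any measurement.
condition_probabilities = {
    "liquid water": 0.1,
    "ample carbon": 0.5,
    "stable single star": 0.3,
    "plate tectonics": 0.1,
    "billions of quiet years": 0.1,
}


def joint_probability(probabilities):
    """Multiply the per-condition guesses, treating them as independent."""
    return math.prod(probabilities)


def expected_count(p, n_candidates):
    """Statistical expectation: probability per candidate times candidates."""
    return p * n_candidates


def prob_at_least_one(p, n_candidates):
    """Chance that at least one candidate qualifies: 1 - (1 - p)^N.

    Assumes 0 <= p < 1. log1p and expm1 keep precision when p is tiny
    and N is huge.
    """
    return -math.expm1(n_candidates * math.log1p(-p))


p = joint_probability(condition_probabilities.values())
n = 1e6  # hypothetical number of candidate planets within reach
print("joint probability per planet:", p)
print("expected number of planets:  ", expected_count(p, n))
print("chance of at least one:      ", prob_at_least_one(p, n))
```

Each extra condition multiplies the per-planet probability down, and only a large enough count of candidates can bring the product back up. Note also that an expectation is not a guarantee: when the expected count is exactly one, the chance of at least one such planet is only about 63 percent.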

On the other hand, this is exceedingly naive evolutionary thinking. Astronomers should stick to their telescopes. The core facts of evolution as a process are divergence from shared origin and adaptive change. There are also some other basic aspects of earthly life that Smith doesn't mention, but that are the main points in our book The Mermaid's Tale, such as the sequestering of interacting but semi-independent units, combinatorial information transfer (signaling), and so on.

There is no reason that life elsewhere has to 'evolve' in the way we understand from what happened here on earth, but let's not quibble about that. Even then, there is no prior reason to think life must be based on water, or carbon (some have suggested that life, even earth-like life, could be based on silicon, for example), or be restricted to particular temperatures (after all, we have Inuit and Saharan nomads, even under our own conditions), or that evolution would have a DNA or any other information-inheritance basis--Lamarckian inheritance of some form might evolve on some planet somewhere, for example. There is no reason evolution would take 4 billion years to become 'intelligent', nor that our kind of intelligence or ideas about communication would evolve.

Even if we accept the two core premises, there is simply no way to work out how, or how long, intelligence would take to evolve. In a sense the whole point of evolution is that it works under the conditions it finds, and would work in its own way under what to us here would be harsher conditions, or more variable conditions, than Smith considers to preclude life. We can't rule out non-earthlike chemical bases for life, other than for earthlike 'life'. Natural selection can work in ways we may not know of, if inheritance is different, for example. It's not that hard to think of other ways.

If life does exist elsewhere, does it have to be 'intelligent' in our sense to count? Is the ETI question really about earth-replicate life--life that could and would want to communicate with the likes of us in the way we (currently) do it?

We think his article is very interesting and useful, but we also think it reveals the cardboard caricature of life and evolution that (as we repeatedly harp on in MT) is so widely prevalent outside of a rather limited range even of biologists.

In addition to these ideas, there is the possibility that even if ETI exists, it is so aggressively acquisitive of resources and so on that it destroys itself quickly, reducing the likelihood that two such sources would exist at similar enough times and stages to be able to detect each other.

There are many reasons that ETI may not exist at all, or if it does that we'll never detect it. The conclusion that we're all alone--for all practical purposes--seems rather sound. Even if one were to suppose that English-speaking life existed, say, a thousand light-years away (that is, nearby in space terms), it would take 2000 years to exchange a single message by electromagnetic means (actually more, because of the delay in reading and responding, and the rapid expansion of the universe), assuming that neither our life nor theirs destroyed itself in the interim (or that theirs had not ended before their message got here). 2000 years is roughly the time since the Roman Empire. How long would it take even to find out, to within a reasonable accuracy, just where the ETs were (and they us) so that travel could be planned or messages accurately directed? So even if somebody does claim to have discovered ETI in their telescopic data, don't let NASA talk you into voting for a big budget increase. Indeed, why allow NASA even the expensive sport of SETI, when lots of us here in the US don't even have health care?
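For anyone who wants to play with the message-exchange arithmetic above, here is a rough sketch. The function name and the reply-delay parameter are ours, invented for illustration, and the sketch deliberately ignores the expansion of the universe, so it gives a lower bound at best.

```python
def round_trip_years(distance_light_years, reply_delay_years=0.0):
    """Years between sending a message and receiving the answer.

    A signal moving at light speed needs one year per light-year, so one
    exchange costs twice the distance plus any time spent composing a reply.
    Cosmic expansion is ignored here (a simplifying assumption).
    """
    if distance_light_years < 0 or reply_delay_years < 0:
        raise ValueError("distance and delay must be non-negative")
    return 2 * distance_light_years + reply_delay_years


# The thousand-light-year neighbor from the text, answering instantly.
print(round_trip_years(1000))

# The same neighbor, with a hypothetical 50 years spent on the reply.
print(round_trip_years(1000, reply_delay_years=50))
```

Doubling the distance doubles the wait, so even a 'nearby' correspondent in galactic terms means a conversation paced in millennia.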

So, we're with Smith in saying: let's take care of what we have, instead of yearning for companionship we can't provide for each other!

There are a couple of other fascinating sides to this story that we'll pursue in Part III.