We continue our musing on the nature of the 'science' in what is so proudly self-proclaimed as 'social science'. The issues are subtle, and it is curious that we as a society--our professors, pundits, op-ed writers, pop-sci writers, and their ilk, and the advisors and advisees in social policy and politics--seem so sure there is such a thing. And, indeed, to make our point we don't have to pound on the thoroughly deserving economists, whose predictions after eons of funded research can't do much better than coin-flipping.

In Part I of this mini-series we described the tradition of labeling social theorists of various stripes either as 'social Darwinists', for those who felt that inequity was a built-in law of Nature that social policy should nurture, or as 'social Lamarckians', for those who felt that human achievement could lead to equity, a principle of Nature that social policy should likewise nurture.

All these thinkers would have said that they were doing science--that is, were describing fundamental truths of the material world. But how did they know what they thought they knew? Did they 'prove' their assertions?

Social Darwinists assert that inequity is not only inevitable and unavoidable, but is the mechanism by which societies compete to succeed (in wealth or power or whatever), and is therefore good. This doesn't mean that social Darwinists are personally cruel people, but that they are sanguine about inequity as being good for society and unavoidable even if some people suffer. But how can one prove that social inequity is inevitable and what kind of 'law' is it that would assert so? What counts as 'inequity'? How can one know? The fact that inequity exists in successfully competing societies (and in downtrodden ones as well) does not imply inevitability, and 'good' is totally a judgment--and of course by definition not good for all.

Is it that if there are random effects on resources, even if each person has the same probability of getting resources, by chance some just won't, and that in the next year or generation they'll be drawing from a position of relative strength or weakness, which will probabilistically make the differences greater? If so, what kind of 'law' is that--based on probability alone, not on anything to do with resources themselves?
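The chance mechanism in the paragraph above is easy to play with. What follows is a toy sketch under our own assumptions, not a model anyone has proposed for a real society: each generation, every person's holdings are multiplied by 1.1 or 0.9 with equal odds, the same odds for everyone. The function names and parameter values are hypothetical. Inequality, measured here with the Gini coefficient, grows anyway, purely from luck compounding on earlier luck.

```python
import random


def gini(values):
    """Gini coefficient: 0 is perfect equality, values near 1 are extreme inequality."""
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # Closed form on sorted data (ranks i = 1..n):
    # G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n
    weighted = sum(rank * x for rank, x in enumerate(xs, start=1))
    return 2.0 * weighted / (n * total) - (n + 1.0) / n


def simulate_wealth(n_people=1000, generations=50, gain=0.1, seed=1):
    """Hypothetical toy model: everyone starts with 1 unit and faces identical odds.

    Each generation, each person's holdings are multiplied by (1 + gain) or
    (1 - gain) with probability 1/2, so whatever luck brought earlier becomes
    the base on which later luck compounds. Returns the Gini coefficient
    for every generation, starting with generation 0.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    wealth = [1.0] * n_people
    history = [gini(wealth)]
    for _ in range(generations):
        wealth = [w * (1 + gain) if rng.random() < 0.5 else w * (1 - gain) for w in wealth]
        history.append(gini(wealth))
    return history


history = simulate_wealth()
print(f"Gini at start: {history[0]:.3f}, after {len(history) - 1} generations: {history[-1]:.3f}")
```

The Gini value starts at 0 and climbs generation by generation, although nothing about the resources themselves, only probability, is doing the work. Whether that deserves to be called a 'law' of society is exactly the question.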

Social Darwinism has caused, or has been used to justify, enormous horrors visited on large numbers of people. But what about its supposed opposite, social Lamarckism (a term, though by no means an idea, that we may have invented)? One might believe that learning and improved social environments, based on sharing and fairness, can lead to an equitable society without competitive winners and losers. People have comparable potential (unless manifestly handicapped by disease) that just needs a chance to flourish. Communist societies believed (or, at least, claimed to believe) in this idea--in society's evolution and even in agricultural evolution (called Lysenkoism, a topic too big to go into here). But how could one ever prove that true social equality (let's not worry about how it's defined) is possible, much less inevitably achievable, even if a semblance of it exists here and there?

There is precious little theory in either view of the kind one usually finds in science. Even defining 'equality' is probably a hopeless task. Is being a basketball player equal to being an accountant or a trucker? Even Darwin's own theory, on which social Darwinism rests, basically argues that if some have an advantage in an environment they'll do better, which in a way verges on tautology and in any case is not inevitable--despite his and others' implications to the contrary.

Biology has since developed a respectable theory of life, based on biochemistry and genetics, but while that theory may account for differences in disease susceptibility or hair color, it can be stretched to culture and social inequality only thinly, by assumptive extrapolation from genetics. The fact that someone may feed on the ideology of competition, in the sense of organismal evolution, does not in any way prove that the same applies to society, even if competition clearly does seem to exist. Likewise, the fact that one can imagine societal equality does not in any way prove that there is a physical basis for such a view. And sharing clearly also exists: in some societies sharing is relatively more important than in others, or at least is reported to be so by their members.

Genes must affect individual proclivities (whatever that means), but the cultural attributes of a group, which can change much more rapidly than its genetic makeup, are obviously several levels removed from individual genes.

So ideas about the evolution, or genetic-behavioral nature, of society and culture are far less supported by real evidence than the purveyors of such ideas typically acknowledge. Still, if we're material beings, and not guided by truly spiritual 'forces', there must be some relationships, no?

Speaking of the latter, materialist views of the world are of course very threatening to the idea that free will exists, and this is even more of a problem for people holding strongly religious views; it is one of many reasons some religious people strongly oppose Darwinian evolution. Another question this raises is how a purely spiritual something-or-other (like a soul or God's will) can make a difference in the material world without itself being material--and hence subject to scientific analysis.

But what is 'free' will, and is whether it exists even a legitimate or determinable empirical question? One view is that our sense of making choices is an illusion, and that it is really our neurons doing the choosing, so that when we finally know enough about each neuron and so on, we will be able to predict one's every move, emotion, or decision.

On the other hand, there may be so many layers of material causation, each involving at least some probabilistic elements that, even in principle, we could not predict individual decisions from knowledge of neurons per se.
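A crude way to see why stacked probabilistic layers might defeat prediction even in principle: in a toy model of our own devising (an assumption for illustration, not a model of real neurons), a yes/no 'decision' passes through a chain of layers, each of which independently flips it with a small probability q. The output matches the input only when an even number of flips occur, which happens with probability 1/2 + 1/2 (1 - 2q)^n for n layers, and that sinks toward a coin flip as n grows. The function names are hypothetical.

```python
import random


def predictability(q, layers):
    """Exact probability that the output of the toy chain matches its input.

    The output equals the input exactly when an even number of the
    independent flips (each with probability q) occur, which happens with
    probability 1/2 + 1/2 * (1 - 2q) ** layers.
    """
    return 0.5 + 0.5 * (1 - 2 * q) ** layers


def simulate_chain(q, layers, trials=100_000, seed=0):
    """Monte Carlo check of the same toy model."""
    rng = random.Random(seed)
    matches = 0
    for _ in range(trials):
        start = rng.random() < 0.5      # the initial 'decision'
        state = start
        for _ in range(layers):
            if rng.random() < q:        # this layer's noise flips the state
                state = not state
        matches += state == start
    return matches / trials


for n in (1, 10, 50, 200):
    print(n, round(predictability(0.05, n), 4))
```

With a 5% flip rate per layer, knowing the input tells you a great deal after one layer and almost nothing after a couple of hundred, even though every step is perfectly 'material'.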

In the latter case, culture is many more levels of interaction and causation removed from individuals' neurons, and more so from their genes. If so, and this seems likely, then culture can be an entirely material phenomenon that cannot be understood by genetic or neuroscientific reductionism. This is not something those convinced of a deterministic evolutionary world like to acknowledge, nor something that gives much confidence about how to develop a sound theory of culture and its evolution.

Biology hasn't solved this causal problem, because we know the truth is mixed and that it is difficult even to tell what is 'genetic' in this context. Being a social parasite like a criminal or 'welfare queen' might be consistent with many genetic determinants, but how does one decide whether that is a defective trait? Indeed, being a legal social parasite, like a hedge-fund manager (pick your own particular favorite), might reflect the same genotypes. These issues are not clear-cut at all.

Nowadays we couch things in terms of 'science', and we accept very poorly supported pop-sci and Just-so stories from psychologists, op-ed writers, and their ilk, but how different are these claims from those of scripture, shamans, or other smooth talkers and demagogues?

Where, if anywhere, is there any science in this? If this is the material world, why can't science answer the questions? If, after billions of research dollars have been spent, society is every bit as screwed up in these kinds of ways as in the past, why do we still even have departments of sociology, psychology, economics (or anthropology)?

These are serious questions in an age of widespread molecular deterministic beliefs, because these beliefs haven't led to a seriously useful theory of the 'laws' of culture and society, yet from a materialistic point of view something of that sort must exist, if only we knew how to find it.

Monday, October 31, 2011

Friday, October 28, 2011

Triumph of the Darwinian method

By

Ken Weiss

In our recent spoof posts about Australopithecus erotimanis, we tried to make the point that it is challenging to infer why traits found today evolved or, even more problematic, to infer this from fossils. This raises several fundamental issues, and they are ones that Charles Darwin addressed even before Origin of Species: explaining the nature of function from observed traits, explaining the fitness effects (for natural selection) of traits past and present, and explaining relationships among species (taxonomy).

Darwin didn't have all the answers (and neither do we), but he did introduce into biology a method that he applied to all of these questions (and so do we). A very fine book by Michael Ghiselin, The Triumph of the Darwinian Method, now more than 40 years old, goes into great detail, using Darwin's own work, to show how transformative and powerful this was, and how Darwin used it in all the many contexts of his writing and thinking. Of course, legions of books and articles have adopted and reflected this approach to the present day.

Darwin didn't proclaim a method and then use it, but adopted and adapted the 'hypothetico-deductive' scientific method that had been developed over the previous century or so, for sciences based on concepts of 'laws of nature', as a way of identifying those laws and testing data in connection with them. It was a framework for evaluating the empirical world.

To do this, Darwin tried to a considerable extent to show that life followed 'laws'. So he didn't invent the method, but he did show, more definitively than his predecessors, how it could be applied to life as well as to planets, ideal gases, and so on. The key was the assumption that life reflected a law-like descent from a common origin (he compared natural selection to gravity, for example).

Given those assumptions, he interpreted species formation and variation, the adaptive functions of traits in fossils and in comparative morphology, taxonomy, biogeography, ecology, and so on. From barnacles to earthworms, orchids, and facial expression, he applied his framework of selection and divergence.

There were mistakes, and one could go on about them, because some were serious. To us, the most serious is that he took the assumed 'laws' too strongly, so that his explanations are sometimes too rigid and in some instances too teleological or even near-Lamarckian: directional evolution, for example, by which a fossil or barnacle was explained as being on the way to some state.

When we (that is, anthropologists who know something) try to explain fossils, like the Australopithecines we have just had some fun being satirical with, we have to try to understand all the central issues--taxonomy, function, adaptation. Given the many problems involved in reconstructions, the complexity of genetic control, the elusiveness and non-law-like aspects of natural selection (versus genetic drift, for example), the incompleteness of data, and much more, it is no surprise that conclusions have to be tentative and assertions circumspect.
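To make the selection-versus-drift point concrete, here is a sketch of the standard textbook Wright-Fisher model of a haploid population of constant size. The parameter values and function names are illustrative assumptions, not anything estimated for Australopithecines. Selection nudges the expected frequency of a favored variant upward each generation, but only n offspring are actually sampled, so in a small population a variant with a slight advantage is usually lost by chance anyway. The same 'law' operates in every run, yet the outcomes differ.

```python
import random


def wright_fisher(n, p0, s, generations, rng):
    """One replicate of a haploid Wright-Fisher population of constant size n.

    p0 is the starting frequency of a variant with relative fitness 1 + s
    (the alternative has fitness 1). Returns the final frequency.
    """
    p = p0
    for _ in range(generations):
        # Selection: deterministic shift in the expected frequency.
        expected = p * (1 + s) / (1 + p * s)
        # Drift: only n offspring are actually drawn (binomial sampling).
        p = sum(rng.random() < expected for _ in range(n)) / n
        if p == 0.0 or p == 1.0:  # variant lost or fixed; nothing more can change
            break
    return p


def fraction_lost(n, p0, s, generations=200, replicates=500, seed=42):
    """Share of replicate populations in which the favored variant was lost."""
    rng = random.Random(seed)
    lost = sum(wright_fisher(n, p0, s, generations, rng) == 0.0 for _ in range(replicates))
    return lost / replicates


# Hypothetical parameter values, chosen only to contrast the two regimes.
print("small population, slight advantage:", fraction_lost(20, 0.1, 0.01))
print("large population, strong advantage:", fraction_lost(500, 0.1, 0.5, replicates=50))
```

In the first case the favored variant disappears in most replicates; in the second it almost never does. Reading adaptive 'purpose' backward from whichever variant happened to survive is correspondingly hazardous.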

One of the main axes we grind on MT is our feeling that many pressures of diverse kinds encourage us not to be sufficiently circumspect, and to claim far too much. This gets attention, grants, and promotion, but it can mislead science when people take distorted claims as true and try to build on them.

Still, while it's fun to have fun with fossil claims, and fun to lampoon the hypersphere of our media-driven science circus, the problems are real and respectably challenging. Perhaps much more than the public--or even many professionals--actually realize.

Darwin, by the way, was often far more circumspect than is usual today. He wanted to be right, of course, and didn't always exercise or express enough caution. But he did frequently acknowledge where he was speculating, and so on.

Thursday, October 27, 2011

More on the True Story of the evolution of the human hand

By

Ken Weiss

The other day, we posted our clearly rigorous explanation of the new human ancestral fossil find, originally named Australopithecus sediba but properly renamed by us Australopithecus erotimanis. We'll not go over old ground here (some readers may have been too squeamish about our explanation to want it repeated anyway). Suffice it to say that one Commenter seemed unconvinced.

The issue surrounds the delicate opposable thumb-endowed hand of A. erotimanis. How did this evolve? Now we must admit that this Commenter (who hides behind the moniker 'occamseraser') can run circles around us in terms of actual knowledge of human paleontology. He (or she) clearly knows the fossil record bone by bone, which we unhappily must confess that we don't.

To flesh out our view and, we thought, make it even more convincing, we wrote a Comment of our own, a short bit of fictionalized history that might be called "The misadventures of P'Qeeb" (since Jean Auel makes millions doing that, a point made by Holly, we felt we ought to be allowed to relay our views that way, too). Our tale tried to bring to life some of the on-the-ground realities of our past evolution. We offered what we think is a totally convincing scenario for the evolution of the dextrous hand. But 'occam' suggested that perhaps A. erotimanii may have evolved their thumbs for functions other than the one we suggested.

We're not stupid (despite occasional appearances), and had thought of other explanations on our own, thank you very much. A thumb could be of great advantage for hitch-hiking, but no hub-caps were found at the site, so that seemed like a rather weak hypothesis. Perhaps the most obvious alternative to an origin by natural selection for a kind of sexual behavioral trait is that the opposable thumb evolved for picking one's nose. But, convenient as that doubtless is, how could it confer a fitness advantage, enabling the picker to out-reproduce those with less manicured (so to speak) nares? Indeed, it could be argued that such behavior would actually have a negative fitness effect: who wants to do what needs to be done with someone too habituated to digital nostrillary grooming? Would they not be put off instead?

No, we don't want to be picky in what we'll accept as an adequate evolutionary explanation. But then our correspondent 'occam' suggested that the A. erotimanii may have evolved to be able to thumb their noses at their crude Alley-Oopish paranthropine contemporaries (see our story, in the Comments, to get a taste of what they were like, the brutes).

We wrote, calmly, to defend our own ideas, by noting that they may not have had noses to thumb! Clearly 'occam' was wrong. But then in a riposte he snidely just said "who nose?", a cheap pun if there ever was one, and not one we can take lying down!

So herewith is exhibit A: the noseless Australopithecine, so obvious that it is easily found on the web. Now, if you look very hard you can see that, yes, they do have a nose--of sorts. It might be pickable, but it certainly isn't thumb-able! No, occam, we're sorry (not!), but your idea simply won't fly.

In case you need a reminder, here is exhibit B, a real nose (also courtesy of the web)! This one's worth thumbing, if you see any disgusting brutes around.

Now, again, we can flesh out our argument against occam's idea in another way. How on earth would there be an advantage for the erotimanii to have a thumb or dextrous hand so they could pick (so to speak) fights with paranthropines? How could that have had anything but negative effects on fitness? A picking explanation might gross out the paranthropines, but thumbing noses (even if they had noses to thumb) would have provoked the paranthropines to dangerous assaults.

Think again (and, yes, seriously if that's possible) about what we're debating when it comes to Darwinian explanations: we need to offer scenarios that are directly relevant to reproductive success. If picking one's nose, or hitchhiking, or even thumbing at wild hominids are true aspects of behavior, even if very satisfying, they are not obviously connected to reproductive success.

In thinking about this we had momentarily wondered if the dextrous hand were designed for holding broad leaves to use when blowing one's nose. But it's not clear, given the nature of Australopithecine noses, that that would not be just an uncontrollable mess. So we dropped such nose-related explanations. And we suggest that occam do the same.

Compare that to our explanation: unless one really wants some actual evidence with sound epistemology behind it, our original explanation, immediately tied to reproductive success, is the clear winner. If one admits that there really aren't serious data on which to construct a story, well, we should acknowledge it openly. And, indeed, keep in mind that no matter how squeamish (or puritanical) you may be, the real world of animals involves many squeamish things, and there's no reason they cannot be important in Nature's cold and very un-squeamish judgments.

The bottom line is that it is not at all easy to understand how things evolved way back when in the days before blog-posts. Incredibly, that is true even when you have the bodies of the actual individuals who did the evolving! Getting correct explanations, and knowing that you have them, are great challenges that even the best of science needs to take seriously.

Wednesday, October 26, 2011

"Let them eat cake!" (Wild applause) Why? Part I

By

Ken Weiss

In this short series, we are asking what kind of real, rather than self-proclaimed, 'science' there can be in social science, and why we don't already have it, if science is so powerful a way to understand the world.

"The poor are starving, Mum. They have no bread!"

"Well, then, let them eat cake!"

Marie Antoinette (whose family did, in fact, have cake and bread, too) may never have said those harsh words in about 1775. The cold quip reflecting a harsh attitude towards the needy had been uttered before, but the point sticks when applied to the royal insensitivity towards social inequity in France.

In the 1790s, and in a spirit of similar kindliness, Malthus concluded his book about population and the inevitability of suffering from resource shortage by saying that this is God's way of spurring mankind to exertion, and hence salvation. The 'Reverend' Malthus sneered at the hopelessness of utopian thinking--and its associated anti-aristocratic policies--then dominating France. Forget about bread: 'they' (not Malthus or his friends and family) can't eat cake because there won't be enough to go around.

This view was justified directly by Herbert Spencer, and indirectly, in effect, by Charles Darwin and Alfred Wallace (all of whom had bread), who adopted Malthus' view as a reflection of the 'law' of natural selection, of competitive death and mayhem; and this view was, and has since often been, used to justify social inequity as an unavoidable (and hence acceptable) aspect of life.

Of course, that such sentiments are cruel, insensitive, and self-serving does not make them false. There is symmetry in these things, because at roughly the same time Marxists held that inequities inevitably lead to conflicts that would gradually lead to a stable, equitable society: a materialist utopianism of a somewhat different kind from the utopianism of the 18th century, which was perhaps less reliant, in principle, upon conflict. Religion is "the opiate of the people," Marx proclaimed, with equal insensitivity to the multitudes for whom religion provided the only hope and solace in their lives (when they hadn't any comfort food). Likewise, the fact that this opposite, egalitarian view is insensitive in that way doesn't make it false, either.

What goes around comes around. Fifty years ago, in 1961, right here in the good ol' USA, similarly elitist, arrogant justifications of social inequity were voiced, by a Presidential candidate no less, as reflected in this famous Herblock cartoon in the Washington Post (for readers of tender age, that's Barry Goldwater, 5-term US Senator and Republican presidential candidate in 1964; the homeless family, as always, were too numerous to name).

The Reagan-Thatcher era, which came 20 years later, revitalized the justifications of inequality, using images such as [black] Cadillac-driving welfare queens, to emotionalize their reasons for eating cake while others hadn't bread. Of course, there were and are abusers of social systems. The difference is that the abusers of the welfare system's entitlements have somewhat different social power, not to mention impact, than the abusers of the legal entitlements to wealth.

Of course, this attitude is not a thing of the past. At recent debates of current Presidential candidates, it has been said that the poor are poor because of their own failings, essentially because they don't try hard enough (wild applause). They don't have medical care? Well, then, let them die in the street! (more wild applause).

Similar views-of-convenience apply to people who question the usefulness of international development aid. Some of these people, often social scientists, believe in development but just don't think current policies will achieve its goals. But one can wonder when and where that is itself a cover for a cold view that we don't want our taxes to help people of the wrong religion/color/location who haven't pulled themselves up by their bootstraps so that they, too, can have 96" flat-screen televisions and 3 cars (the fact that they don't have boots, much less bootstraps, gets somewhat less mention).

Now, our view on this kind of social egotism is clear. But it might not seem relevant to MT, a blog purportedly about science. The scientific relevance is there, but in subtle ways that blur the kinds of polarized political uses, often by demagogues, that serve the convenient end of getting elected without necessarily having actual policies that would justify it (something all political parties are prone to).

But what kind of science is this, if it is science at all? The authors, regardless of their views, thought of themselves as scientists. That is, they saw what they believed to be the truth of the material world, none of them relying on received truth from scripture. They felt, or acted as if they felt, that they were saying things that were not only profoundly true, but that reflected the irrefutable, unavoidable laws of Nature. But does the scientist's view of the world inform his or her interpretation of the science, or is her or his view of the world informed by the science? There was arrogance of privilege on both sides: both sides were led by people who ate cake (even Marx, who, though rather impoverished, had a Sugar Daddy in Friedrich Engels).

If the harshest defenders of competitive determinism were 'social Darwinists', as such views were characterized early on, the harshest defenders of equity could be called 'social Lamarckians'. In the next installment, we'll explain what we mean, as we search for meaning, relevance... and any actual 'science' in this arena of human thought.

"The poor are starving, Mum. They have no bread!"

"Well, then, let them eat cake!"

Marie Antoinette (whose family did, in fact, have cake, and bread too) may never actually have said those harsh words, attributed to her around 1775. The cold quip, reflecting a harsh attitude towards the needy, had been uttered before, but the point sticks when applied to royal insensitivity towards social inequity in France.

In the 1790s, in a spirit of similar kindliness, Malthus concluded his book about population and the inevitability of suffering from resource shortage by saying that this is God's way of spurring mankind to exertion, and hence salvation. The 'Reverend' Malthus sneered at the hopelessness of utopian thinking--and its associated anti-aristocratic policies--then dominating France. Forget about bread, 'they' (not Malthus or his friends and family) can't eat cake because there won't be enough to go around.

This view was justified directly by Herbert Spencer and, in effect, indirectly by Charles Darwin and Alfred Wallace (all of whom had bread), who adopted Malthus' view as a reflection of the 'law' of natural selection, of competitive death and mayhem; this view was, and since has often been, used to justify social inequity as an unavoidable (and hence acceptable) aspect of life.

Of course, that such sentiments are cruel, insensitive, and self-serving does not make them false. There is a symmetry in these things, because at roughly the same time Marxists held that inequities inevitably lead to conflicts that would gradually produce a stable, equitable society: a materialist utopianism of a somewhat different kind from the utopianism of the 18th century, which was perhaps less reliant, in principle, upon conflict. Religion is "the opium of the people," Marx proclaimed, with equal insensitivity to the multitudes for whom religion provided the only hope and solace in their lives (when they hadn't any comfort food). Likewise, the fact that this opposite, egalitarian view is insensitive in that way doesn't make it false, either.

What goes around comes around. Fifty years ago, in 1961, right here in the good ol' USA, similarly elitist, arrogant justifications of social inequity were voiced, by a future Presidential candidate no less, as reflected in this famous Herblock cartoon in the Washington Post (for readers of tender age, that's Barry Goldwater, 5-term US Senator and Republican presidential candidate in 1964; the homeless family, as always, were too numerous to name).

The Reagan-Thatcher era, which came 20 years later, revitalized the justifications of inequality, using images such as [black] Cadillac-driving welfare queens, to emotionalize their reasons for eating cake while others hadn't bread. Of course, there were and are abusers of social systems. The difference is that the abusers of the welfare system's entitlements have somewhat different social power, not to mention impact, than the abusers of the legal entitlements to wealth.

Of course, this attitude is not a thing of the past. At recent debates of current Presidential candidates, it has been said that the poor are poor because of their own failings, essentially because they don't try hard enough (wild applause). They don't have medical care? Well, then, let them die in the street! (more wild applause).

Similar views-of-convenience apply to people who question the usefulness of international development aid. Some of these people, often social scientists, believe in development, but just don't think current policies will achieve their goals. But, one can wonder, when and where is that itself a cover for a cold view that we don't want our taxes to help people of the wrong religion/color/location who haven't pulled themselves up by their bootstraps so that they, too, can have 96" flat-screen televisions and 3 cars (the fact that they don't have boots, much less bootstraps, gets somewhat less mention).

Now, our view on this kind of social egotism is clear. But it is not really relevant to MT, a blog purportedly about science. The scientific relevance is real, but it lies in subtle ways that blur the kinds of polarized political uses, often by demagogues, that serve the convenient end of getting elected without necessarily having actual policies that would justify it (something all political parties are prone to).

But what kind of science is this--if it is science at all? The authors, regardless of their views, thought of themselves as scientists. That is, they saw what they believed to be the truth of the material world, none of them relying on received truth from scriptures. They felt, or acted as if they felt, that they were saying things that were not only profoundly true, but that reflected the irrefutable, unavoidable laws of Nature. But does the scientist's view of the world inform his or her interpretation of the science, or is her or his view of the world informed by the science? There was arrogance of privilege on both sides; both sides were led by people who ate cake (even Marx, who though rather impoverished had a Sugar Daddy in Friedrich Engels).

If the harshest defenders of competitive determinism were 'social Darwinists', as such early views were characterized, the harshest defenders of equity could be called 'social Lamarckians'. In the next installment, we'll explain what we mean, as we search for meaning, relevance....and any actual 'science' in this arena of human thought.

Tuesday, October 25, 2011

Australopithecus erotimanis, and the evolution of the human hand

By

Ken Weiss

WARNING: The following post contains adult content!

From the very beginning of formal taxonomy in biology, genus and species names have been assigned partly as descriptions of important aspects of a species. Hence, Homo sapiens refers to our species' purported wisdom. The underlying idea is that one correctly understands the key functions that characterize the species. Linnaeus did not have 'evolution' as a framework with which to make such judgments, but we do. And we should use that framework!

However, sometimes, in the rush to publish in the leading tabloids (in this case, Science magazine), a name is chosen too hastily. That clearly applies to the recently ballyhooed South African human ancestor, more than 1.9 million years old. Substantial fossil remains of two individuals, a male and a female, were found and seemed clearly to be contemporaneous. Many features suggested that they could be marketed as a Revolutionary! revision to our entire understanding of human origins.

Now, too often in paleoanthropology there is little substantial evidence for such dramatic consequences, similar to the pinnate carnage known as the War of Jenkins' Ear between Britain and Spain in 1739. But not here! In this case, the specimens were dubbed Australopithecus sediba (See the special issue of Science, Sept 9, 2011 [subscription required]), and there are many traits, some more dramatic than others, by which to argue that it is Really (this time) important.

We haven't the space to outline all these features, but although we are amateurs at paleontology (for expertise, we fortunately have Holly the Amazing Dunsworth as part of our MT team from time to time), we feel qualified to comment. One of the most remarkable features of A. sediba is its long, delicate digits on its obviously dextrous hand, and in particular its long and clearly useful thumb. But useful for what?

For one who is rooted, or rutted, in classical human paleontology, and thinks that tool use is what Made us Human, the obvious inference is: a dextrous hand for tool use! There is, however, a tiny problem: no tools were found at the site.

Now this can be hand-waved away in defense of the explanatory hypothesis derived from classical adaptive thinking. Wooden tools, like the stems chimps strip and use to ferret out termites, wouldn't preserve, and at that early stage of our evolution, stone pebbles might have been used--or even made--but would perhaps be sparse and, if not remodeled by the creatures, unrecognizable. Or perhaps these creatures hadn't carried their tools to the site. There may indeed be perfectly reasonable explanations for why no tools were found, but one must at least admit that the absence of tools does not constitute evidence for the tool hypothesis.

Still, if you believe there must be a functionally adaptive explanation for absolutely everything, and you are committed to the current framework of explanations about our ancestry, then tool-use is the obvious one. How occasional use of pebbles led to higher reproductive success--which, remember, is what adaptive explanations must convincingly show--is somewhat less than obvious. Also, it is the female specimen whose hand is best preserved, though the male is inferred (from one finger bone!) to have been similarly handy. But females don't throw pebbles to gather berries!

A satisfying explanation

So how solid or even credible is the tool-use explanation? Could there be better scenarios? We think so, and we believe our idea is as scientifically valid as the tired old one of tool use.

It is obvious upon looking at the fossil hand that its most likely purpose was, not to mince words about it, masturbation. Just look at the hand itself and its reach position (figure 2). Think about it: deft and masterly self-satisfying would yield heightened sexuality, indeed a state of keeping oneself aroused at all times, ready for the Real Thing whenever the opportunity might arise. Unlike having to wait for prey to amble by, one could take one's evolutionary future in one's own hands--and use one's tool in a better way, one might say.

Being in a dreamy state is a lot less likely to provoke lethal strife within the population, or "Not tonight, I'm too tired" syndromes, than is the high-stress life of hunting giraffes (much less rabbits) or trying to bring down berries by throwing chunky stones at them. Our laid-back scenario does not require fabricating stories of how rock-tossing indirectly got you a mate, because pervasive arousal would be much more closely connected to reproductive coupling, a way of coming rapidly to the important climax: immediate evolutionary success.

Indeed, and here is a key part of our explanation, the same fitness advantage would have applied to both the males and the females. If both parties were at anticipatory states more of the time, fitness-related activity would have occurred even more frequently than it does now, if you can imagine that, and quickly led to our own very existence as a be-thumbed if not bewildered species.

Supporting our hypothesis, vestiges of the original use are still around, as for example the frequency with which football and baseball players grab themselves before each play. Of course, humans seem subsequently and unfairly to have evolved to be less gender-symmetric in this regard. But our explanation is far better than the tired stone-axe story-telling with which we're so familiar. For this reason, we suggest the new nomenclature for our ancient ancestor: Australopithecus erotimanis.

Now, you may think our scenario is simply silly and not at all credible. But is it? By what criterion would you make such a judgment? Indeed, even being silly wouldn't make it false. And, while you may view the standard Man the Hunter explanation as highly plausible, being plausible doesn't make it true. Nor, when you get right down to it, is the evidence for the stone-age hypothesis any better than the evidence for our hypothesis.

Indeed, if you think carefully about it, even the presence of some worked pebbles would not count as evidence that hands evolved for tool use. The dextrous hand could have been an exaptation, that is, a trait that evolved in some other context and was later co-opted for a new function--in this case, the flexible hand, once evolved for one use, could then be used to make and throw tools. What we have explained here is the earlier function that made the hand available in that way.

Don't laugh or sneer, because this is actually a not-so-silly point about the science, or lack of science, involved in so much of human paleontology. It's a field in which committed belief in the need for specific and usually simple adaptive scenarios, using a subjective, culture-specific sense of what is 'plausible', determines what gets into the literature and the textbooks (though our idea might have a better chance with National Geographic).

Will anthropology ever become a more seriously rigorous science, with at least an appropriate level of circumspection? It's something to ponder.

Monday, October 24, 2011

We had a bone to pick

By

Ken Weiss

Here's the BBC report of a story relatively out of our realm of expertise (that is, we're totally ignorant in this area). An archeological find that was discovered 30 years ago, and just reported in Science, has been dated, and casts some doubt on a struggling theory of the age of human habitation of the Americas, and the apparent subsequent disappearance of many mammals, like mastodons.

It was once thought that 'we' (that is, Native Americans) got here around 12,000 years ago. Shortly thereafter, many large animal species disappeared. People using a particular projectile style called Clovis points were argued by some to have hunted mastodons and other large species to extinction. Others argued that we couldn't have driven such species to extinction, and that it must have been some other kind of ecological change that was responsible.

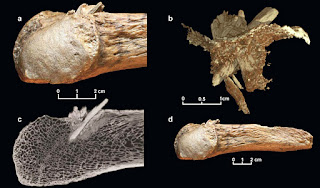

Accumulating evidence is that humans got here somewhat earlier than had been thought, but it was still questionable what happened to the Big Game species. The latest evidence dates a mastodon killed (or at least wounded) by a human-made projectile to an early time, and suggests that humans did at least quickly learn to hunt these beasts, before the appearance of Clovis-style tools.

So, we arrived and bye-bye mastodons! Now, humans had been in the Old World for millennia upon millennia, and it's no surprise that those who could manage in the arctic climate and work their way across the Bering land bridge (presumably in part by hunting migrating game) knew how to hunt the ground-dwelling species they found here. They had to have eaten something, after all, even in the winter!

The interesting thing to us, who are not archeologists and haven't been involved in debates about timing, Clovis, and so on, is whether this find just shows that we hunted big things like mastodons, or whether we were responsible for their total demise. Or whether climate or other changes (related to those that produced the Bering land bridge?) were in the main responsible.

Let's assume humans are guilty. How can this be? How can the relatively sparsely distributed hunting bands at that time (and there's no evidence for anything more dense and sophisticated than such groups) have tracked down and killed off all of several species? After all, the ancestors and contemporaries of the New World settlers didn't exterminate all large land mammals in the Old World. And many species in the New World (bison, deer, and others) were doing very well for a long time after our first appearance. Bison disappeared, current wisdom has it, only when the Indians gained horses, from atop which they could slaughter them on a large scale.

Could this be the phenomenon of new immigrants doing in species that had not adapted to them as predators? If so, what was different about human predators, since clearly there were other predators in the New World too, so it wasn't Shangri-La for mastodons? Or were mastodons too, well, mammoth for sabre-tooth tigers to mess with? This new find shows earlier evidence of human predation, but does it change these questions themselves? Presumably the professionals (not us) will have things to say about this.

Recent studies have strengthened the case that the makers of Clovis projectile points were not the first people to occupy the Americas. If hunting by humans was responsible for the megafauna extinction at the end of the Pleistocene, hunting pressures must have begun millennia before Clovis.

Mastodon rib with the embedded bone projectile point. (A) Closeup view. (B) Reconstruction showing the bone point with the broken tip. The thin layer represents the exterior of the rib. (C) CT x-ray showing the long shaft of the point from the exterior to the interior of the rib. (D) The entire rib fragment with the embedded bone projectile point. (From Science, subscription required.)

Friday, October 21, 2011

Identifying candidate genes; theory and practice

So, we, along with collaborators here at Penn State and at other institutions, happen to be working on a project to map genes associated with craniofacial morphology in mice and baboons. Yes, you can laugh, given all we've said about the problems with looking for genes for traits, that we too are involved in genomewide mapping! But genes are involved in morphology, and mice and baboons do differ, perhaps even systematically (by lineage), so, in theory, it's not a futile search. And we're hoping that all our skepticism about how these kinds of studies are usually done can point us toward more useful ways to think about the problem--not to enumerate genes but to document or better understand complex genetic causal architecture. In theory.

We're at the point now where traits have been measured on about 1200 mice, and these mice have been genotyped at thousands of variable nucleotide marker sites across the genome, the statistics have been run, 'lod' scores generated, and we're ready to identify candidate genes associated with these traits.

The lod scores -- 'logarithm of the odds', a measure of how much more likely the data are if a genetic marker (a variable locus along a chromosome) is near a gene that contributes to variation in a trait than if it is not -- can point to fairly wide or fairly narrow intervals on a chromosome. The scores of interest are those that are statistically significant, or 'suggestive', that is, almost statistically significant. Some intervals indicated by statistically interesting marker sites are in gene-rich regions, some in gene-poor regions of their respective chromosomes, so some are easier to mine than others.
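To make the lod idea concrete, here is a minimal sketch assuming simple single-marker regression, which is only one of several ways lod scores are computed in mouse crosses (the post doesn't say which method the project used). The score compares a model in which each genotype class at a marker has its own trait mean against a model with one overall mean; with normally distributed residuals this reduces to (n/2) * log10(RSS_null / RSS_marker). 'Significance' is then often judged against a genome-wide threshold found by shuffling phenotypes among animals (a permutation test). The function names and the six-animal data are hypothetical.

```python
import math
import random


def _rss(values, fitted):
    """Residual sum of squares between observed and fitted values."""
    return sum((v - f) ** 2 for v, f in zip(values, fitted))


def marker_lod(genotypes, phenotypes):
    """Single-marker regression lod score (hypothetical helper).

    genotypes: genotype code per animal at one marker (e.g. 0, 1, 2)
    phenotypes: trait measurement per animal, in the same order
    """
    n = len(phenotypes)
    grand_mean = sum(phenotypes) / n
    rss_null = _rss(phenotypes, [grand_mean] * n)  # model with no marker effect

    # Alternative model: each genotype class gets its own mean.
    classes = {}
    for g, y in zip(genotypes, phenotypes):
        classes.setdefault(g, []).append(y)
    class_means = {g: sum(ys) / len(ys) for g, ys in classes.items()}
    rss_marker = _rss(phenotypes, [class_means[g] for g in genotypes])

    if rss_null == 0:  # trait does not vary at all: no evidence either way
        return 0.0
    if rss_marker == 0:  # marker explains the trait perfectly
        return math.inf
    return (n / 2) * math.log10(rss_null / rss_marker)


def permutation_threshold(markers, phenotypes, n_perm=200, alpha=0.05, seed=1):
    """Genome-wide lod threshold: the (1 - alpha) quantile of the maximum
    lod over all markers when phenotypes are shuffled among animals."""
    rng = random.Random(seed)
    shuffled = list(phenotypes)
    maxima = []
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # breaks any genotype-phenotype association
        maxima.append(max(marker_lod(g, shuffled) for g in markers))
    maxima.sort()
    return maxima[max(0, round((1 - alpha) * n_perm) - 1)]


# Toy data: six animals, one marker that tracks the trait, one that doesn't.
trait = [1.0, 1.2, 2.0, 2.2, 3.0, 3.2]
linked = [0, 0, 1, 1, 2, 2]
unlinked = [0, 1, 2, 0, 1, 2]
print(round(marker_lod(linked, trait), 2))    # large: strong evidence
print(round(marker_lod(unlinked, trait), 2))  # small: weak evidence
print(permutation_threshold([linked, unlinked], trait, n_perm=50))
```

A real genome scan would use thousands of markers and far more animals; with six animals the permutation threshold is only illustrative.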

What we do with each chromosomal interval is to identify all the genes along it, and then hunt for data on how each gene has been characterized. We are doing this manually, but it can be, and often is, automated, with a program that looks for certain kinds of genes, in specified gene families, for example. We are choosing to do this by hand because we are trying to reduce the preconceived assumptions with which we approach the problem. Our goal is to collect expression and function data about each gene in every interval. Yes, that's a lot of genes, but we know that most genes are pleiotropic, expressed in many tissues, with multiple functions, most genes have not been fully characterized, and we don't want to assume things a priori, such as that a given class of gene is what we're looking for.
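The bookkeeping described here (list every gene in an interval, then record what is known about each) can be sketched in a few lines. This is an illustration only: the `GeneRecord` fields, gene names and coordinates are invented, and real annotations would come from genome databases whose formats the post doesn't describe. Nothing in the sketch filters by gene family, in keeping with the unbiased approach above.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GeneRecord:
    """Hypothetical annotation record; fields are illustrative only."""
    name: str
    start: int                        # base-pair position on the chromosome
    end: int
    expression: Optional[str] = None  # where expression is reported, if anywhere
    function: Optional[str] = None    # functional description, if any


def genes_in_interval(genes: List[GeneRecord], lo: int, hi: int) -> List[GeneRecord]:
    """Every gene overlapping [lo, hi], with no filtering by gene family."""
    return [g for g in genes if g.start <= hi and g.end >= lo]


def annotation_gaps(genes: List[GeneRecord]) -> dict:
    """Count how many genes lack expression or function annotation."""
    return {
        "total": len(genes),
        "no_expression": sum(g.expression is None for g in genes),
        "no_function": sum(g.function is None for g in genes),
    }


genes = [
    GeneRecord("geneA", 1_000, 5_000, expression="brain", function="kinase"),
    GeneRecord("geneB", 4_000, 9_000),
    GeneRecord("geneC", 12_000, 15_000, function="putative, by sequence similarity"),
    GeneRecord("geneD", 30_000, 31_000, expression="testis"),
]
hits = genes_in_interval(genes, 4_500, 14_000)
print([g.name for g in hits])
print(annotation_gaps(hits))
```

Applied to a real interval, a tally like this is what produces figures such as "no expression data for 49 of 95 genes".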

But this is quite problematic.

Let's take an interval we slogged through yesterday. It's one for which the mapping of several different traits yielded significant lod scores, so it is potentially of greater interest than intervals tagged by only one trait. It may, for example, reflect multiple traits that have correlated genetic causation during the development of the head. Anyway, this interval, on mouse chromosome 8, includes 95 identified genes and a few identified microRNAs (DNA coding for short RNA molecules that affect the expression of other genes). In fact, and not unusually, very little of the non-coding DNA in this region has been characterized, which is itself problematic, since some of it is surely involved in gene regulation, and who's to say that the variation we're looking at is not due to gene regulation?
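One way to flag regions that, like this one, turn up for several traits is to find positions covered by the significant intervals of at least k different traits. Below is a minimal sweep-line sketch, assuming each trait's mapping results have already been reduced to inclusive (start, end) intervals in base pairs on a single chromosome; the trait names and coordinates are made up.

```python
from typing import Dict, List, Tuple

Interval = Tuple[int, int]  # (start, end) in base pairs, both inclusive


def shared_regions(
    trait_intervals: Dict[str, List[Interval]], min_traits: int = 2
) -> List[Tuple[int, int, int]]:
    """Segments (start, end, n_traits) where intervals from at least
    `min_traits` distinct traits overlap."""
    events = []
    for trait, intervals in trait_intervals.items():
        for start, end in intervals:
            events.append((start, +1, trait))    # interval opens here
            events.append((end + 1, -1, trait))  # closes just past its end
    events.sort()  # by position; at ties, closings (-1) come before openings

    active: Dict[str, int] = {}  # open intervals per trait
    regions = []
    prev_pos = None
    for pos, delta, trait in events:
        # Number of distinct traits covering the stretch [prev_pos, pos - 1].
        n_traits = sum(1 for c in active.values() if c > 0)
        if prev_pos is not None and pos > prev_pos and n_traits >= min_traits:
            regions.append((prev_pos, pos - 1, n_traits))
        active[trait] = active.get(trait, 0) + delta
        prev_pos = pos
    return regions


example = {
    "snout_length": [(100, 500)],
    "skull_width": [(300, 800)],
    "jaw_depth": [(450, 1000)],
}
print(shared_regions(example))
print(shared_regions(example, min_traits=3))
```

Counting distinct traits, rather than raw overlapping intervals, keeps one trait with two nearby peaks from looking like two independent traits.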

Of these 95 genes, we can't find expression data for 49 of them. Over half. So, are they expressed in the anatomical region relevant to the head traits that mapped to this area? We don't know. It's a major task to study the expression of even one gene in mice, much less a host of genes. Apparently no functional data are available for 15 of these genes, and of the rest, for which function has been characterized, many are assumed to have a given function just because their DNA sequence is similar to that of a gene whose function is known. Many others are characterized as "may be involved in" such and such. And a handful have been identified as involved in a specific function, but expression data show that they are expressed in other tissues or regions of the body -- this seems to be particularly true for 'spermatogenesis' genes, which tend to show up everywhere.* Olfactory (odor) receptors as well.

So, given the inconsistencies, vagaries, and incompleteness of the data, how are we supposed to go about choosing candidate genes? One way is the Drunk Under the Lamp-post approach (looking for lost keys where there's light even if that's not where they were dropped): to grab a gene we already know about, or narrow our search to genes in families that make some kind of biological sense. That's looking for things we already know and making the comforting assumption they must be the right ones. This will clearly work sometimes, and may be better than totally random guessing. But such an approach of convenience perforce ignores many plausible candidates, both those that have been characterized and those that have not. So, even when genomic databases are complete (if that day ever arrives -- but keep in mind that different patterns of gene expression are associated with different environments, and thus that characterization may never be complete; reminding us of Wolpert's caution about yet another knock-out mouse with no apparent phenotype: "But have you taken it to the opera?") this approach may not identify all actual candidates.
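The cost of the lamp-post shortcut shows up even in a toy filter: keeping only genes from 'sensible' families automatically discards every gene that has no functional annotation yet, whatever its true role. The family labels, gene names and preferred-family set below are invented for illustration.

```python
from typing import Dict, List, Optional, Set


def lamp_post(genes: Dict[str, Optional[str]], preferred: Set[str]) -> List[str]:
    """The shortcut: keep only genes annotated to a 'sensible' family."""
    return [name for name, family in genes.items() if family in preferred]


def unexamined(genes: Dict[str, Optional[str]], preferred: Set[str]) -> List[str]:
    """Genes the shortcut never looks at, including every unannotated one."""
    kept = set(lamp_post(genes, preferred))
    return [name for name in genes if name not in kept]


# Hypothetical interval: family label per gene; None = not yet characterized.
interval = {
    "geneA": "growth factor",
    "geneB": None,
    "geneC": "olfactory receptor",
    "geneD": None,
    "geneE": "transcription factor",
}
preferred = {"growth factor", "transcription factor"}
print(lamp_post(interval, preferred))
print(unexamined(interval, preferred))
```

However the preferred set is chosen, uncharacterized genes (`None`) can never pass the filter, which is the bias the paragraph describes.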

And this is all true even if we believe we're looking for single genes 'for' our traits. What about when we throw in the kinds of complexity we are always going on about here on MT? It's fair to say that most gene mapping studies don't result in confirmed genes for traits, or find a few whose contributions are usually minor relative to those of other, unidentified genes. See most GWAS, for example. And if we had access to unpublished GWAS results, the point would be even more convincing.

So, while we do believe that genes underlie traits in some collective, aggregate ways, and that in theory they are identifiable, current methods and the current state of (in)completeness of the databases leave us more convinced than ever that identifying genes 'for' traits is an uphill slog.

-----------------------------------------------------------------------------------------------------------------------

* Here's a section from an in situ experiment on a mouse embryo, taken from the extensive gene expression database, GenePaint. The darker areas are where the gene in question is expressed. In this case, the strongest expression can be seen in the brain, the pituitary gland, the lungs, the whiskers, the growing sections of the digits, and there is expression in other tissues as well. The function of this gene is characterized as, "Probably plays an important role in spermatogenesis."

Thursday, October 20, 2011

"The rhythm of existing things"

By

Ken Weiss

Here are some idle thoughts triggered by a chance discovery.

Modern evolutionary science is credited, in almost all textbooks, as having grown from a general change of worldview. The old, static view, which went back to the Bible and to the worldviews of ancient Greece and Rome, was displaced by a process-based view of nature. Rather than seeing the world as static, or as a series of ad hoc events dictated by God, we came to realize that things changed over time.

In the early 19th century, the geologist Charles Lyell, following on some recent British predecessors, advanced the 'uniformitarian' theory of geology, displacing ad hoc, catastrophist views of geological processes such as the building of island chains, the lifting and erosion of mountains, changes in coastlines, and so on. Rather than invoking sudden events, or events ordained by God, we can extrapolate the forces we see operating today back into the dark depths of history. Individual events are unique, but the process is the same, and we can extrapolate it into the future, too.

Darwin was profoundly affected by Lyell, and if any one thing characterizes Darwin's contribution to the world, it was the application of uniformitarianism to life: life was an historical process, not a series of sudden creation events. This view of slow continuity was absolutely fundamental to Darwin's thinking, as he stressed time and time again.

The idea--nowadays the assumption--that processes operating today were perforce operating in the past has been considered one of the founding legacies of 19th-century science, due largely to Lyell and Darwin and many other, less prominent figures.

But how accurate is that view? Around 170 AD, the Roman Emperor Marcus Aurelius, in his Meditations, was musing about the nature of life:

Pass in review the far-off things of the past and its succession of sovranties without number. Thou canst look forward and see the future also. For it will most surely be of the same character, and it cannot but carry on the rhythm of existing things. Consequently it is all one, whether we witness human life for forty years or ten thousand. For what more shalt thou see?

The context of Aurelius' thought had to do with keeping human affairs during one's own lifetime in perspective. We're not classicists and can't mine the classical literature for other such musings, though it's not surprising that most basic thoughts were raised by those sage ancestors. But the idea of history as a steady stream seemed as obvious to Marcus Aurelius as it seems to science today. This may be all the more remarkable because we often blame the stodgy Middle Ages on fundamentalist Biblical Christianity, yet ideas of uniformitarianism were not new. Given that Christian scholars knew very well the deep reaches of human history, it is rather surprising to us that uniformitarianism had so patchy a history, and that the 19th-century awakening to it seemed, at least as told by subsequent historians, so transforming.

Treatments we're aware of (the Wikipedia entry for 'uniformitarianism', e.g.) credit this concept to the 18th century, with the term itself coined in the early 19th. They do not go back to the ancients, and indeed, it was only in reading Marcus Aurelius for his general philosophy of life that we stumbled inadvertently across his version.

Evolutionary ideas, crude and terse, were widespread, if not dogma, among the Islamic scholars of the Middle Ages, as we've mentioned in previous posts. So the interesting question is how new facts being discovered, such as collections of specimens from global travelers, somehow led to the revival of these ancient ideas at a time when we--that is, Darwin--had the methodological framework to make the ideas more rigorous. For every thing there is a season, or a cycle of seasons.

Yes, when it comes to human ideas, there's nothing new under the sun... and indeed that phrase is itself from Ecclesiastes:

What has been will be again,

what has been done will be done again;

there is nothing new under the sun.

That antedates Marcus Aurelius by quite some time.

Of course, we can't let ourselves get carried away by uniformitarianism. The important point is the general belief that Nature is orderly: it has properties or 'laws' by which it works, and these are taken to be universal and unchanging. Gravity doesn't take days off, and wind and water always erode rock.

On the other hand, what specifically makes life the way it is are the unique events of mutation and adaptation. It is only by becoming different that life evolves. Humans are the result of continuing, fundamental processes, but also of unique events involving those processes. Worms and oaks are not heading toward a human state.

Indeed, what is unique under the sun is life itself! Uniformitarianism says that geological processes have continuity. Mountains don't arise spontaneously out of a momentary blip in physical processes. But life did arise that way, as far as we know.

So we need to recognize the importance of continuity, but also of difference.

Wednesday, October 19, 2011

Hollywood Stars

By

Ken Weiss

We here on MT regularly criticize, or even lampoon, studies that we think are trivial, over-sold, or over-priced. Often these are 'studies' that seem to have very little serious scientific value beyond curiosity, and that can sometimes border on circus (such as digging up Tycho Brahe to get his DNA, sequencing a 115-year-old woman to see what genes 'made' her live that long, or desperately seeking to send men to Mars with butterfly nets to catch Martian life).

But these kinds of studies, not to mention huge mainlining studies like many GWAS and related expensive, exhaustive studies, are all over the news. This is not just because the investigators are so quick to brag about their work and claim its high importance, since eventually the wolf-crying would wear thin. The studies also sell. Print and visual media people may not always be deep thinkers, and may be unduly gullible when talking to professors, but they know there are readers eager to eat up the stories they write.

So the fault, dear Brutus, may be in ourselves. Are we just negative spoil-sports who want to take all the fun out of science? In many ways, the answer is Yes. Science, real science that is, is a technically difficult attempt to understand Nature. If universities and journalism worked as they should (from this perspective), there would be a lot less of this science-marketing, and perhaps better science. Hoopla and its rewards alter what people think of doing, or believe they can get funded to do.

Of course, lots of really good science is being done along with the chaff, but the chaff is too expensive, and too deeply integrated into what people in universities, and media people in the real world, are pressured to do for many different reasons (not least to earn a living).

Then again, nobody pays any serious attention to grumps like us, even if our points about the science itself are sound, as we at least believe them to be. So we and MT (except for Holly's posts) are at worst harmless and, at most, laughable. On the other hand, we do have a suggestion that would remedy both the problem of costly, overblown, trivial science and our own perhaps overblown criticism:

We need to establish a category of Entertainment Science, things with trivial, man-bites-dog human-interest, minimal theoretical value, and great telegenic content, and where the profit motive is legitimate rather than corrupting. Government, being duly prudent, should be banned from funding any such science. Instead, there are deeper pockets elsewhere, and people with the appropriate experience, desires, and expertise: Entertainment Science should be paid for by Hollywood.

Tuesday, October 18, 2011

Genes that ensure a long life? Not likely

The genome of a woman who was 115 years old when she died has been sequenced. According to a report from the BBC (the analysis hasn't yet been published, but the results were reported at the recent Human Genetics meeting in Montreal), the woman was the oldest in the world at the time of her death and showed no signs of dementia; in fact, when tested for cognitive ability, she was found to think as well as a woman in her 60s or 70s.